Most businesses generate large volumes of data through operations, transactions, and customer interactions. Yet converting that data into decisions, predictions, or automation remains a challenge. Traditional analytics tools provide historical summaries but fall short in supporting real-time actions or continuous improvement.

Machine learning development addresses this gap. It involves building systems that learn from data and adapt over time, enabling organizations to automate complex tasks, forecast trends, and improve operational efficiency at scale.

This blog walks you through the whole machine learning development process. You will learn about the primary types of machine learning, the tools used in modern workflows, and each stage of the development process. It also covers real-world applications, common challenges, and how to determine whether to build in-house or work with a dedicated team.

Key Takeaways

- Machine learning development follows a step-by-step process: prepare the data, train the model, deploy it, and then monitor and maintain it over time.

- The type of machine learning you choose should match your data availability and business objectives.

- Tools like TensorFlow, PyTorch, and Scikit-learn support different use cases and development stages.

- Building an internal team offers long-term control, while partnering with experts can speed up delivery and reduce implementation risk.

What Is Machine Learning Development?

Machine learning development means building systems that learn from data and improve over time. Unlike traditional software, which follows rules written explicitly by developers, these systems derive their behavior from patterns they find in the data.

The process combines data science, coding, and domain knowledge. Teams select the appropriate data, train models on it, and refine the results iteratively to improve performance.

Machine learning systems can lose accuracy over time if they are not maintained. This makes structured development, ongoing validation, and active monitoring essential for long-term performance.

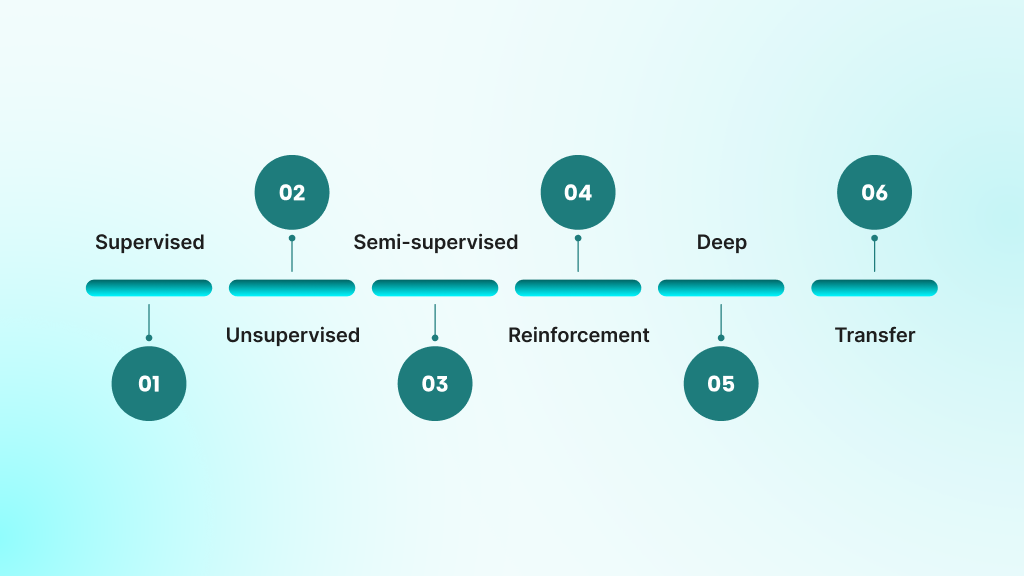

Types of Machine Learning Development

There are different ways to build machine learning models. Each works best for certain types of problems and data.

Choosing the right approach early helps the model run efficiently, make good use of the data you have, and stay aligned with real business needs.

Supervised Learning

Supervised learning trains a model using labeled data. That means each example in the dataset already has a correct answer. The model learns by comparing its guesses to the real outcomes and adjusting to improve accuracy.

Why it matters:

- Models improve quickly with clear feedback during training.

- You can track performance using simple metrics, such as accuracy and recall.

- Works well in business tasks like fraud detection and customer churn prediction.
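To make "comparing guesses to real outcomes" concrete, here is a minimal pure-Python sketch of logistic regression trained by gradient descent. The churn framing and the four data points are hypothetical, and in practice you would reach for a library such as scikit-learn rather than hand-rolling the loop.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(X, y, lr=0.5, epochs=2000):
    """Batch gradient descent on labeled examples: X is a list of feature lists, y holds 0/1 labels."""
    n, n_features = len(X), len(X[0])
    w, b = [0.0] * n_features, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n_features, 0.0
        for xi, yi in zip(X, y):
            # The model's guess: the probability that this example's label is 1.
            p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
            # Compare the guess with the correct answer; the error drives the update.
            err = p - yi
            for j in range(n_features):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Nudge the parameters in the direction that reduces the average error.
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else 0

# Hypothetical toy data: support tickets filed last month -> churned (1) or stayed (0).
X = [[0.0], [1.0], [3.0], [4.0]]
y = [0, 0, 1, 1]
w, b = train_logistic_regression(X, y)
print([predict(w, b, x) for x in X])
```

The labels are what make this supervised: every update is driven by the gap between the predicted probability and the known answer.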

Unsupervised Learning

Unsupervised learning works without labeled data. The model analyzes the dataset’s structure to identify patterns, clusters, or trends within it. It is often used when the goal is to explore the data or uncover something new.

Why it matters:

- Helps uncover hidden patterns that you may not have been aware of.

- Useful in early data analysis when labels are not available.

- Powers features like customer segmentation and anomaly detection.
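Customer segmentation is often done with k-means clustering, which groups points without any labels. The sketch below is a plain implementation of the standard algorithm (alternating assignment and update steps); the customer data and its two features are hypothetical.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Plain k-means (Lloyd's algorithm) on a list of coordinate tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)          # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: every point joins the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of the points assigned to it.
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:         # assignments stopped changing
            break
        centroids = new_centroids
    return centroids, clusters

# Hypothetical customers: (annual spend in $1,000s, store visits per month). No labels given.
customers = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 8.5), (8.5, 9.0)]
centroids, clusters = kmeans(customers, k=2)
for c, members in zip(centroids, clusters):
    print(c, members)
```

The algorithm never sees a "correct" segment; the two groups emerge only from the structure of the data.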

Semi-supervised Learning

Semi-supervised learning uses a mix of labeled and unlabeled data. A small labeled set helps guide the learning process, while the larger unlabeled portion fills in the gaps. This approach improves performance without requiring every example to be labeled.

Why it matters:

- Cuts down time and cost spent labeling large datasets.

- Often delivers better accuracy than unsupervised learning alone.

- Useful in fields such as healthcare or law, where labeling is time-consuming or costly.
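One common semi-supervised technique is self-training (pseudo-labeling): fit on the labeled set, label the unlabeled points the model is confident about, and refit. The sketch below assumes a simple nearest-centroid classifier on a single feature and a hypothetical confidence margin; real projects would use a stronger base model.

```python
def fit_centroids(xs, ys):
    """Nearest-centroid classifier: one mean value per label (1-D feature for brevity)."""
    return {label: sum(x for x, y in zip(xs, ys) if y == label) / ys.count(label)
            for label in set(ys)}

def predict_with_margin(centroids, x):
    # Distance to each class centroid; a larger gap between the two closest means more confidence.
    dists = sorted((abs(x - c), label) for label, c in centroids.items())
    return dists[0][1], dists[1][0] - dists[0][0]

def self_train(labeled_x, labeled_y, unlabeled_x, min_margin=2.0, rounds=5):
    xs, ys, pool = list(labeled_x), list(labeled_y), list(unlabeled_x)
    for _ in range(rounds):
        centroids = fit_centroids(xs, ys)
        confident = []
        for x in pool:
            label, margin = predict_with_margin(centroids, x)
            if margin >= min_margin:
                confident.append((x, label))
        if not confident:
            break
        # Adopt confident predictions as pseudo-labels and retrain on the enlarged set.
        for x, label in confident:
            xs.append(x)
            ys.append(label)
            pool.remove(x)
    return fit_centroids(xs, ys), pool

# Hypothetical data: only two labeled examples, six unlabeled ones.
centroids, still_unlabeled = self_train(
    labeled_x=[1.0, 9.0], labeled_y=["low", "high"],
    unlabeled_x=[1.5, 2.0, 2.5, 8.0, 8.5, 5.2],
)
print(centroids, still_unlabeled)
```

The ambiguous point (5.2) stays unlabeled, which is the kind of case you would route to a human annotator.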

Reinforcement Learning

Reinforcement learning trains models through actions and rewards. The system learns by trial and error: it takes an action, receives feedback in the form of a reward, and uses that information to make better decisions over time.

Why it matters:

- Ideal for systems that need to adapt to changing environments.

- Supports long-term planning rather than one-time predictions.

- Used in robotics, dynamic pricing, and self-driving vehicles.
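The trial-and-error loop can be illustrated with tabular Q-learning, a classic reinforcement learning algorithm. The environment here is a hypothetical five-cell corridor where only reaching the last cell pays a reward; the learning rate, discount, and exploration rate are arbitrary illustrative values.

```python
import random

N_STATES = 5            # hypothetical corridor with positions 0..4; position 4 is the goal
MOVES = (-1, +1)        # action 0 = step left, action 1 = step right

def env_step(state, action):
    next_state = min(max(state + MOVES[action], 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]   # q[state][action]: estimated long-term value
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Trial and error: explore at random sometimes (or when estimates tie), else exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = env_step(state, action)
            # Feedback: immediate reward plus the discounted value of the best follow-up action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q

q = q_learning()
policy = ["right" if q[s][1] > q[s][0] else "left" for s in range(N_STATES - 1)]
print(policy)
```

Only the final step earns a reward, yet the agent learns to move right from the very first cell. The discounted `gamma * max(...)` term is what turns one-off feedback into long-term planning.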

Deep Learning

Deep learning is a type of machine learning that uses multi-layered neural networks. These networks can process large amounts of unstructured data, such as images, sound, and text. They automatically learn which features are most important.

Why it matters:

- Reduces the need for manual feature engineering.

- Handles complex inputs, including speech, photos, and documents.

- Powers advanced systems like facial recognition and large language models.
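Frameworks such as PyTorch or TensorFlow normally handle this work. The pure-Python sketch below shows what "multi-layered" means mechanically, using the classic XOR toy problem, which no single linear layer can solve. A hidden layer computes intermediate features, and backpropagation adjusts both layers from the output error. The layer size, learning rate, and epoch count are arbitrary choices for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor_mlp(hidden=4, lr=0.5, epochs=5000, seed=1):
    """Two-layer network (2 inputs -> `hidden` sigmoid units -> 1 output) trained with backpropagation."""
    rng = random.Random(seed)
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]   # XOR: not linearly separable
    w1 = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0

    def forward(x):
        # Hidden layer: each unit computes its own learned feature of the raw inputs.
        h = [sigmoid(w[0] * x[0] + w[1] * x[1] + bj) for w, bj in zip(w1, b1)]
        out = sigmoid(sum(wj * hj for wj, hj in zip(w2, h)) + b2)
        return h, out

    def mean_squared_error():
        return sum((forward(x)[1] - y) ** 2 for x, y in data) / len(data)

    initial_loss = mean_squared_error()
    for _ in range(epochs):
        for x, y in data:
            h, out = forward(x)
            # Backpropagation: error signal at the output, then pushed back to each hidden unit.
            d_out = (out - y) * out * (1 - out)
            d_hidden = [d_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]
            for j in range(hidden):
                w2[j] -= lr * d_out * h[j]
                w1[j][0] -= lr * d_hidden[j] * x[0]
                w1[j][1] -= lr * d_hidden[j] * x[1]
                b1[j] -= lr * d_hidden[j]
            b2 -= lr * d_out
    return initial_loss, mean_squared_error(), [round(forward(x)[1]) for x, _ in data]

initial_loss, final_loss, predictions = train_xor_mlp()
print(f"loss {initial_loss:.3f} -> {final_loss:.3f}, predictions {predictions}")
```

Nobody tells the hidden units which features to compute; they emerge from training, which is the property the text describes at a much larger scale.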

Transfer Learning

Transfer learning starts with a model that is already trained on one task and then fine-tunes it for another. It saves time by reusing what the model has already learned, rather than starting from scratch.

Why it matters:

- Requires less data and computing power.

- Speeds up development, especially when deadlines are tight.

- Works well for tasks like custom NLP, image tagging, or sentiment analysis.
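Real transfer learning typically reuses a large pretrained network, such as an image or language model, and fine-tunes some of its layers. The deliberately tiny analogy below keeps only the core idea. A linear model is first "pretrained" on a related, hypothetical source task. Its parameters then serve as the starting point for a target task, and after the same small training budget it ends up with lower error than a model started from zero.

```python
def fit_linear(xs, ys, w=0.0, b=0.0, lr=0.1, steps=10):
    """Gradient descent on mean squared error for y = w*x + b, starting from (w, b)."""
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        w -= lr * 2 * sum(e * x for e, x in zip(errors, xs)) / n
        b -= lr * 2 * sum(errors) / n
    return w, b

def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
# Hypothetical source task with a generous training budget: y = 2x + 1.
source_ys = [2 * x + 1 for x in xs]
pretrained_w, pretrained_b = fit_linear(xs, source_ys, steps=200)

# Hypothetical related target task with only 10 training steps: y = 2x + 1.5.
target_ys = [2 * x + 1.5 for x in xs]
scratch = fit_linear(xs, target_ys, steps=10)
fine_tuned = fit_linear(xs, target_ys, w=pretrained_w, b=pretrained_b, steps=10)

scratch_loss = mse(xs, target_ys, *scratch)
fine_tuned_loss = mse(xs, target_ys, *fine_tuned)
print(f"from scratch: {scratch_loss:.4f}, fine-tuned: {fine_tuned_loss:.4f}")
```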

Essential Machine Learning Tools and Technologies

Machine learning requires tools and frameworks that can process data, perform complex mathematical operations, and deploy models at scale. These tools help you build faster and more reliably by offering built-in functions, algorithms, and support systems.

The choice of tools has a significant impact on project outcomes because different frameworks excel in specific tasks and use cases. Let us examine some tools that can make your development process easier.

TensorFlow

TensorFlow is an open-source ML framework developed by Google. It is built for performance and supports production-scale workloads.

Best for:

- Large-scale model training and deployment.

- Multi-platform support (cloud, mobile, browser).

- Building custom deep learning pipelines.

Many teams choose TensorFlow for long-term projects that require scalability, reliability, and seamless integration with production systems.

PyTorch

PyTorch is known for flexibility and ease of use. It offers dynamic computation graphs, which make it ideal for research and experimentation.

Best for:

- Rapid prototyping of deep learning models.

- Academic research and experimentation.

- Projects that need intuitive debugging.

If you want to build and test models quickly, PyTorch is a strong choice, especially during early development stages.

Keras

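Keras is a high-level API for building and training neural networks. It ships with TensorFlow, and since Keras 3 it can also run on JAX and PyTorch; older versions supported backends such as Theano and CNTK. It simplifies deep learning model creation.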

Best for:

- Beginners in deep learning.

- Quick setup of neural networks.

- Clean and readable code for model building.

Use Keras when you need to build models fast without diving deep into low-level code.

Apache Spark MLlib

Apache Spark MLlib is the machine learning library within Apache Spark. It is designed for distributed computing and efficiently handles large datasets.

Best for:

- Processing large-scale data across clusters.

- Scalable ML pipelines in enterprise environments.

- Integration with big data systems like Hadoop.

MLlib is ideal when your datasets are too large for a single machine and you need parallel processing.

R Programming Language

R is built for statistical computing and data visualization. It is widely used in research-heavy environments.

Best for:

- Statistical modeling and data exploration.

- Academic or clinical research projects.

- Visualizing trends and relationships.

Use R when you need rigorous statistical analysis or when data visualization is a key part of your workflow.

MLflow

MLflow is an open-source platform for managing the machine learning lifecycle. It supports tracking, packaging, and deploying models.

Best for:

- Tracking experiments and metrics.

- Managing model versions.

- Simplifying deployment to different environments.

MLflow is especially useful in team settings where model transparency and lifecycle management matter.

Jupyter Notebooks

Jupyter Notebooks offer an interactive coding environment. You can run code, write notes, and show visualizations, all in one file.

Best for:

- Sharing models and results with stakeholders.

- Exploratory data analysis.

- Keeping code, outputs, and explanatory notes together so analyses stay traceable.

Jupyter is perfect for collaborative work, tutorials, or internal documentation of machine learning workflows.

Docker and Kubernetes

Docker and Kubernetes help you transition from development to production by creating consistent and scalable environments.

Best for:

- Packaging ML models in containers for deployment.

- Managing model infrastructure across servers.

- Running scalable, real-time prediction systems.

These tools become essential when your model is ready for production and needs to run reliably under different conditions.

Tip: Use TensorFlow for deep learning and scale. Choose PyTorch for flexibility and easier debugging. For classic ML tasks, go with Scikit-learn. Your choice depends on the data size, model type, team skills, and where you plan to deploy it.

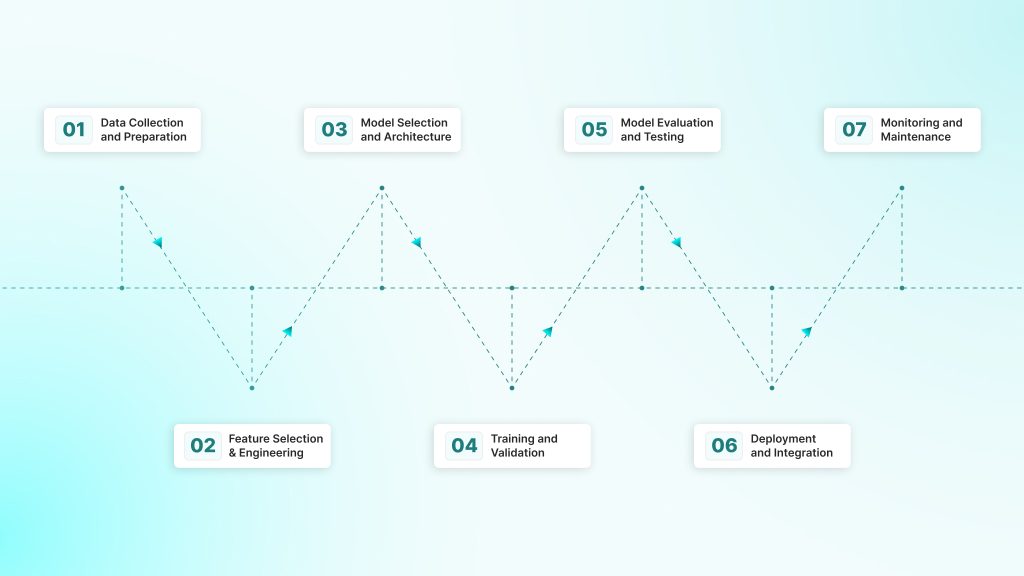

Step-by-Step Machine Learning Development Process

The machine learning development process follows a structured methodology that helps produce maintainable AI solutions. This systematic approach reduces project risk and increases the likelihood of a successful deployment.

Each step contributes to building a machine learning model that performs reliably in real-world conditions. Listed below are seven critical phases that build upon each other to create production-ready ML systems.

Step 1: Data Collection and Preparation

Start by identifying relevant data sources. These may include internal systems, such as customer records, transactions, or operational logs, as well as external datasets. Once collected, the data must be cleaned, structured, and validated. This includes addressing missing values, resolving inconsistencies, and ensuring uniform formats.

Prepared data must be accurate, complete, and aligned with the problem at hand. Model outcomes are only as strong as the quality of the input data.
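The cleaning tasks above (inconsistent formats, duplicates, missing values) can be sketched in a few lines. The field names `customer_id`, `channel`, and `amount` are hypothetical. Median imputation is only one of several reasonable strategies for filling missing values.

```python
from statistics import median

def clean_records(records):
    """Normalize formats, drop duplicates, and impute missing numeric values."""
    seen, cleaned = set(), []
    for r in records:
        row = {
            "customer_id": str(r["customer_id"]).strip(),
            # Normalize inconsistent category spellings such as " Email" vs "email".
            "channel": (r.get("channel") or "unknown").strip().lower(),
            "amount": float(r["amount"]) if r.get("amount") not in (None, "") else None,
        }
        key = (row["customer_id"], row["channel"], row["amount"])
        if key in seen:                     # exact duplicate once formats are normalized
            continue
        seen.add(key)
        cleaned.append(row)
    # Fill missing amounts with the median of the observed ones.
    observed = [row["amount"] for row in cleaned if row["amount"] is not None]
    fill = median(observed) if observed else 0.0
    for row in cleaned:
        if row["amount"] is None:
            row["amount"] = fill
    return cleaned

raw = [
    {"customer_id": " 101", "channel": "Email", "amount": "20.5"},
    {"customer_id": "101", "channel": " email", "amount": 20.5},   # same record, messier format
    {"customer_id": "102", "channel": None, "amount": ""},         # missing values
    {"customer_id": "103", "channel": "Phone", "amount": "40"},
]
for row in clean_records(raw):
    print(row)
```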

Step 2: Feature Engineering and Selection

Transform raw data into features that the model can interpret. This includes deriving new variables from timestamps or text and encoding categorical values.

Once engineered, features must be evaluated. Remove redundant or irrelevant features, and reduce the number of variables if there are too many. A clean feature set helps the model train faster and perform better.
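Here is a small sketch of both halves of this step. It derives hour and weekend flags from a timestamp, one-hot encodes a categorical field, and then drops features that never vary. The `timestamp` and `channel` fields and the category list are hypothetical. Dropping constant columns is just the simplest form of feature selection.

```python
from datetime import datetime

def engineer_features(rows, categories):
    features = []
    for row in rows:
        ts = datetime.fromisoformat(row["timestamp"])
        feats = {
            "hour": ts.hour,
            "is_weekend": 1 if ts.weekday() >= 5 else 0,   # Monday=0 ... Saturday=5, Sunday=6
        }
        # One-hot encode the categorical "channel" value against a fixed category list.
        for cat in categories:
            feats[f"channel={cat}"] = 1 if row["channel"] == cat else 0
        features.append(feats)
    return features

def drop_constant_features(features):
    """Remove columns that never change; they carry no information for the model."""
    keep = [name for name in features[0] if len({f[name] for f in features}) > 1]
    return [{name: f[name] for name in keep} for f in features]

rows = [
    {"timestamp": "2024-03-09T14:30:00", "channel": "web"},    # a Saturday
    {"timestamp": "2024-03-11T09:15:00", "channel": "store"},  # a Monday
    {"timestamp": "2024-03-11T18:00:00", "channel": "web"},
]
selected = drop_constant_features(engineer_features(rows, ["web", "store", "phone"]))
for f in selected:
    print(f)
```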

Step 3: Model Selection and Architecture Design

Choose a model architecture that aligns with the business objective and performance expectations. Simple algorithms, such as linear models or decision trees, often work well for classification or forecasting on structured, tabular data. Deep learning models are a better fit when you are working with images, audio, or language data.

Next, define how the model fits into the larger system: set up the data flow, decide how its outputs will be consumed, and make sure it integrates with your current platforms. Building early prototypes helps you test ideas and keep things simple before scaling up.
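A useful first prototype is often a trivial baseline that any serious model must beat. The sketch below gives every candidate the same `fit`/`predict` interface, the convention scikit-learn estimators follow, so models can be swapped without touching the surrounding code. `ThresholdRule` and the data are hypothetical stand-ins for a real candidate model.

```python
from collections import Counter

class MajorityBaseline:
    """Predicts the most common training label for every input."""
    def fit(self, X, y):
        self.label = Counter(y).most_common(1)[0][0]
        return self

    def predict(self, X):
        return [self.label for _ in X]

class ThresholdRule:
    """Hypothetical one-feature prototype: predict 1 when feature 0 exceeds a threshold."""
    def __init__(self, threshold):
        self.threshold = threshold

    def fit(self, X, y):
        return self   # nothing to learn; the threshold is fixed by hand

    def predict(self, X):
        return [1 if x[0] > self.threshold else 0 for x in X]

def accuracy(model, X, y):
    preds = model.predict(X)
    return sum(p == t for p, t in zip(preds, y)) / len(y)

X = [[1], [2], [3], [7], [8]]
y = [0, 0, 0, 1, 1]
for model in (MajorityBaseline(), ThresholdRule(threshold=5)):
    print(type(model).__name__, accuracy(model.fit(X, y), X, y))
```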

Step 4: Training and Validation

Train the model on the prepared data (labeled data, for supervised tasks), adjusting its internal parameters to minimize error. Watch for signs of overfitting or underfitting. Then evaluate it on held-out validation data, or with cross-validation, to see how well it performs on new, unseen data.

Refine training using hyperparameter tuning. This involves adjusting settings such as learning rate, tree depth, or batch size to enhance results. The goal is to build a model that works well in real use, not just during training.
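The sketch below combines a held-out validation split with a simple grid search. It swaps in k-nearest neighbors, whose hyperparameter is the number of neighbors `k`, because its effect is easy to see. The same loop applies to learning rate, tree depth, or batch size. The dataset, including its two deliberately noisy labels, is hypothetical.

```python
import random
from collections import Counter

def knn_predict(train, x, k):
    # Majority label among the k nearest training points (1-D feature for brevity).
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def accuracy(train, data, k):
    return sum(knn_predict(train, x, k) == y for x, y in data) / len(data)

def tune_k(data, candidate_ks, val_fraction=0.3, seed=0):
    rng = random.Random(seed)
    shuffled = data[:]
    rng.shuffle(shuffled)
    n_val = max(1, int(len(shuffled) * val_fraction))
    val, train = shuffled[:n_val], shuffled[n_val:]
    # Score each candidate only on held-out data the model never trained on.
    scores = {k: accuracy(train, val, k) for k in candidate_ks}
    best_k = max(scores, key=scores.get)
    return best_k, scores

# Hypothetical 1-D dataset: label is 1 when x >= 10, with two deliberately noisy labels.
data = [(float(x), int(x >= 10)) for x in range(20)]
data[3] = (3.0, 1)
data[15] = (15.0, 0)
best_k, scores = tune_k(data, candidate_ks=[1, 3, 5, 7])
print(best_k, scores)
```

With `k=1` the model reproduces every training label perfectly, noise included. Training accuracy alone therefore says little; the validation score is what guides the choice.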

Step 5: Model Evaluation and Testing

Evaluate the model’s performance using the appropriate metrics. Accuracy alone may not be enough. Use precision, recall, F1 score, or AUC for a better view.
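The following sketch shows why accuracy alone can mislead on imbalanced problems such as fraud, and how precision, recall, F1, and AUC are computed from their standard definitions. Here AUC is calculated as the probability that a randomly chosen positive scores above a randomly chosen negative. The labels and scores are hypothetical.

```python
def classification_metrics(y_true, y_pred):
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

def roc_auc(y_true, scores):
    """Probability a random positive is scored above a random negative (ties count half)."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical: 1 fraud case in 10 transactions, and a model that never flags anything.
y_true = [0] * 9 + [1]
y_pred = [0] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(accuracy, classification_metrics(y_true, y_pred))   # high accuracy, zero recall
```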

Test the model with rare cases and unusual inputs. Try it on low-quality data too. Compare results with your current tools to see if it improves performance.

Step 6: Deployment and Integration

Prepare the trained model for deployment in your production setup. Set up the proper infrastructure, build APIs or batch pipelines, and ensure it works well with existing systems.

Next, plan for growth with load balancing and resource management, and keep performance stable through regular monitoring and tuning. Secure the system with access controls and data encryption, and track model versions so every deployment is traceable.
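As a minimal illustration of "build APIs", the sketch below exposes a model as a JSON endpoint using only Python's standard library. It validates input and returns a model version with each response. This is the kind of process you might package into a container image. The weights, the version string, and the `/predict` route are hypothetical. A production service would more likely use a dedicated web framework or model server behind a load balancer.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

MODEL_VERSION = "churn-model-1.0.0"  # hypothetical version tag returned with every prediction
WEIGHTS, BIAS = [0.8], -2.0          # hypothetical parameters exported from a trained model

def predict_payload(body):
    """Validate a JSON request body and return (HTTP status, response dict)."""
    try:
        features = [float(v) for v in json.loads(body)["features"]]
    except (ValueError, KeyError, TypeError):
        return 400, {"error": 'expected JSON like {"features": [number, ...]}'}
    if len(features) != len(WEIGHTS):
        return 400, {"error": f"expected {len(WEIGHTS)} feature(s), got {len(features)}"}
    score = sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS
    return 200, {"prediction": int(score >= 0), "model_version": MODEL_VERSION}

class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = predict_payload(self.rfile.read(length))
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

def serve(port=8080):
    # Binding to 0.0.0.0 lets the process accept traffic when it runs inside a container.
    HTTPServer(("0.0.0.0", port), PredictHandler).serve_forever()
```

Keeping the prediction logic in `predict_payload`, separate from the HTTP handler, lets you unit-test it without starting a server.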

Step 7: Monitoring and Maintenance

Monitor the model regularly after deployment. Changes in data, user behavior, or connected systems (often called drift) can reduce its performance. Use monitoring tools to track key metrics and send alerts when performance drops.

Retrain the model as needed to reflect changes in data and evolving business objectives. Keep clear records of model versions, data updates, and settings. Strong long-term results depend on consistent oversight and maintenance.
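One widely used drift check is the Population Stability Index (PSI). It compares how a feature's values are distributed across bins at training time versus in production. In the sketch below, the bin edges and data are hypothetical. The 0.2 alert threshold reflects a common rule of thumb, but it is an assumption you would tune for your own system.

```python
import math
from bisect import bisect_right

def bin_proportions(values, edges):
    """Share of values per bin; `edges` are interior cut points, giving len(edges) + 1 bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    # A small floor keeps empty bins from producing log(0) below.
    return [max(c / len(values), 1e-4) for c in counts]

def population_stability_index(reference, live, edges):
    ref, cur = bin_proportions(reference, edges), bin_proportions(live, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))

def check_drift(reference, live, edges, threshold=0.2):
    """Return the PSI and whether it crosses the (assumed) alert threshold."""
    score = population_stability_index(reference, live, edges)
    return score, score > threshold

# Hypothetical feature values: the training sample vs. two batches seen in production.
training = list(range(1, 11))
stable_batch = list(range(1, 11))
shifted_batch = list(range(8, 18))
edges = [3, 6, 9]
print(check_drift(training, stable_batch, edges))
print(check_drift(training, shifted_batch, edges))
```

An alert like this is a signal to investigate and possibly retrain, not proof that accuracy has dropped. Pair it with performance metrics once ground-truth labels arrive.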

Benefits & Challenges of Machine Learning

Machine learning can enhance decision-making, increase efficiency, and facilitate personalization. But to work well, it needs a strong foundation.

It also brings technical, organizational, and compliance challenges. You need to address these early to ensure long-term success.

Before making significant investments or design choices, it is essential to understand both the benefits and the risks.

Benefits of Machine Learning Development

Machine learning provides a set of practical advantages that directly impact how businesses operate, compete, and scale.

- Improved Decision Accuracy: ML models analyze large datasets to uncover patterns that support faster and more informed business decisions. These systems reduce reliance on guesswork and enable consistent forecasting across departments.

- Process Automation: Tasks that previously required domain experts, such as quality control or document review, can now be automated using machine learning. This increases output capacity while reducing error rates.

- Personalized User Experiences: ML systems allow companies to serve millions of users with tailored content, recommendations, and workflows. These models learn from user behavior and continuously adapt, improving customer engagement and satisfaction over time.

- Operational Efficiency and Cost Control: Machine learning helps identify bottlenecks, optimize workflows, and reduce unnecessary spend across departments. It enables more precise resource allocation and often leads to measurable savings in both time and cost.

- Stronger Competitive Positioning: Organizations that adopt machine learning early often outperform competitors tied to traditional systems. The ability to predict, respond, and adapt faster becomes a core strategic differentiator in data-intensive industries.

Challenges of Machine Learning Development

While the benefits are substantial, successful machine learning initiatives depend on addressing key operational and technical constraints.

- Dependence on High-Quality Data: ML models require clean, complete, and representative datasets. Inaccurate or biased data leads to models that fail in production, produce misleading results, or reinforce existing errors.

- Specialized Skill Requirements: Building and maintaining ML systems requires expertise in data science, algorithm development, infrastructure, and domain knowledge. Many teams struggle to hire or retain qualified professionals.

- Infrastructure and Resource Demands: Training and deploying models often involve significant compute power, data storage, and system integration. These requirements can raise the cost and complexity of implementation, especially for mid-sized teams.

- Compliance and Transparency Risks: ML systems must align with evolving standards around data privacy and auditability. Meeting these requirements involves documenting and regularly reviewing how models make decisions.

Applications of Machine Learning in Business

Machine learning helps solve business problems that older systems struggle with. It enhances speed, accuracy, and flexibility in areas such as customer service and financial risk management.

The examples below show how businesses use machine learning to analyze data and make informed decisions.

Customer Support Automation

Customer service teams often struggle with repeated queries and long wait times. Machine learning reduces this burden by automating the first layer of support.

How it helps:

- Auto-sorts tickets based on issue type or urgency.

- Generates instant replies for common questions.

- Escalates complex cases to human agents when needed.

This leads to faster responses, reduced workload, and consistent service quality, even during high-volume periods.

Fraud Detection and Prevention

Older fraud systems relied on fixed rules that attackers could learn and bypass. Machine learning enables faster, more adaptive fraud detection.

How it helps:

- Learns from transaction patterns in real time.

- Flags unusual behavior that may signal fraud.

- Adapts constantly to new fraud tactics.

As a result, threats are caught early, and businesses stay ahead of evolving risks.

Supply Chain Optimization

Forecasting demand used to rely on averages, which failed during disruptions. Machine learning brings precision by reacting to real-world signals.

How it helps:

- Analyzes live data like weather, transit delays, and demand spikes.

- Improves stock planning and delivery accuracy.

- Cuts excess inventory and avoids stockouts.

This leads to a more flexible, data-driven supply chain.

Predictive Maintenance

Scheduled maintenance often resulted in wasted time or unexpected breakdowns. Machine learning improves on this by timing maintenance around the actual condition of the equipment.

How it helps:

- Tracks real-time sensor data to detect early warning signs.

- Predicts failures before they occur.

- Schedules service only when needed.

This approach improves equipment uptime and cuts repair costs.

Marketing and Sales Intelligence

Generic campaigns waste budget and miss the mark. Machine learning turns scattered customer data into sharp insights.

How it helps:

- Segments audiences by behavior, location, or interests.

- Personalizes content and timing to match user intent.

- Automatically optimizes campaigns based on real-time results.

This boosts engagement, increases conversions, and improves ROI.

Credit Risk and Financial Assessment

Fixed scoring systems miss new risks and lack flexibility. Machine learning updates risk models in real time.

How it helps:

- Combines multiple data sources, including repayment history and digital behavior.

- Detects risky patterns early.

- Speeds up credit decisions without lowering accuracy.

Lenders gain better visibility and can respond faster to market shifts.

Healthcare Diagnostics

Manual diagnosis relies on the skill and time of the clinician, which can vary significantly across individuals. Machine learning adds consistency and data-driven decision support.

How it helps:

- Analyzes large sets of medical data to spot patterns.

- Supports the early detection of diseases.

- Gives doctors decision support based on accurate data.

This can improve treatment quality and help reduce diagnostic errors.

Human Resource Optimization

Hiring and retention often rely on guesswork and incomplete data. Machine learning brings structure to talent decisions.

How it helps:

- Screens resumes and interviews to find strong candidates.

- Predicts turnover risks using internal data.

- Supports workforce planning with long-term insights.

It lowers hiring costs and helps build more stable, high-performing teams.

Develop or Partner? How to Approach Machine Learning Implementation

When implementing machine learning, businesses must choose between building an internal development team or working with a specialized partner. This decision directly impacts time-to-market, cost, and the success of the final solution.

Building In-House Capabilities

Building internally offers long-term control and deep organizational knowledge. However, it requires a significant upfront investment and lengthy timelines.

- Recruitment demands are high: Attracting qualified ML engineers, data scientists, and MLOps specialists is both time-consuming and costly.

- Infrastructure needs are complex: Companies must set up scalable environments for training, testing, and deployment.

- Results take time: Establishing a functional, self-sufficient ML team often takes 12 to 24 months.

This path is best suited for companies with a consistent ML roadmap, existing technical depth, and the ability to absorb the costs and time required to build from scratch.

Partnering with a Dedicated Development Team

For businesses with immediate needs, limited internal bandwidth, or high implementation risk, partnering with an experienced ML team is often the better route.

- Faster delivery: External teams already have the tools, pipelines, and processes to move quickly.

- Lower risk: Established teams reduce the trial-and-error phase by applying frameworks that have already proven effective at scale.

- Built-in expertise: Domain-aware teams understand business constraints, compliance, and integration challenges.

This approach helps companies deploy functional, production-ready systems without overloading internal teams or delaying time-sensitive initiatives.

Why DEVtrust Fits This Role

DEVtrust offers a proven model for businesses that want to move with speed and confidence. The team brings extensive experience delivering machine learning solutions across healthcare, finance, logistics, and education. They manage the full lifecycle, from data preparation to deployment, while aligning each system with core business objectives.

What DEVtrust delivers:

- Predictive analytics platforms

- Intelligent document processing tools

- Custom conversational AI systems

- Smooth integration with existing tools and workflows

What they work with: DEVtrust leverages platforms such as OpenAI GPT-4, Azure OpenAI, Google Vertex AI, and LangChain, tailoring each solution for optimal scalability and performance.

DEVtrust focuses on solving business-critical problems using reliable AI and machine learning solutions that work in production, meet compliance standards, and create measurable ROI.

Conclusion

Machine learning development plays a crucial role in building systems that address real-world problems, enhance decision-making, and support long-term business objectives. It requires structured execution, the right tools, and a clear understanding of where value is created.

DEVtrust helps businesses implement machine learning with confidence, from planning through deployment and maintenance.

Reach out to us if you want to turn your machine learning plans into fully operational systems that deliver results.

